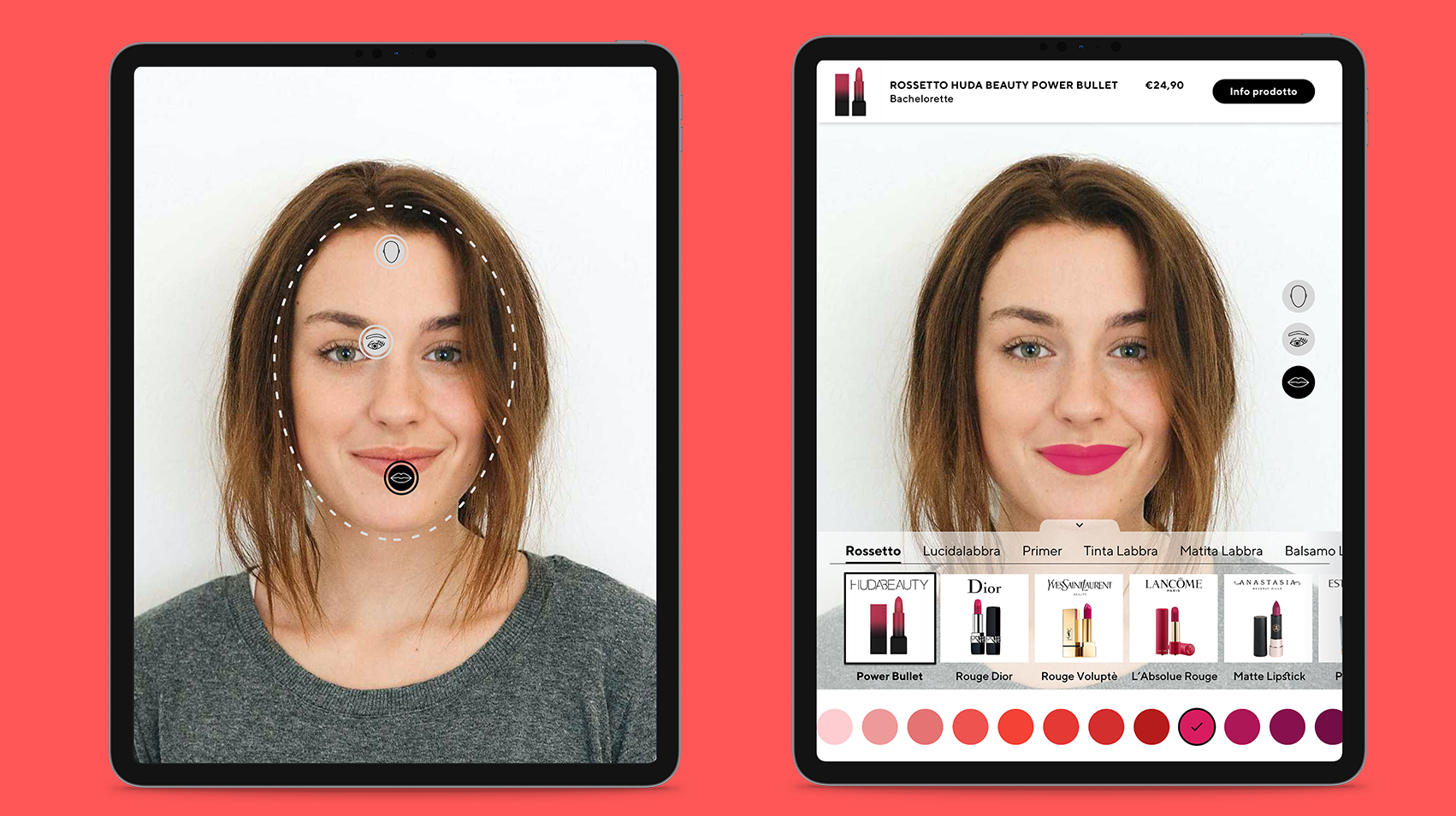

A digital product for the Virtual Try-On of cosmetics, built on facial recognition software and an in-depth study of UX and UI.

Virtual Try On of beauty products

The context and the market need

Recent events related to Covid-19 have forced cosmetics stores to review their sales process and to alter the customer experience. In the shop, it was customary to use samples and test products, which today can be a vehicle for new Coronavirus infections and are therefore prohibited. Among the various solutions that businesses can put in place to deal with this situation, we explored the opportunities offered by technology.

We first conducted a user study to capture all the nuances of the buying process: not only the makeup test itself, but also the unconscious psychological aspects that come into play during the in-store experience. Users have been accustomed to a certain shopping experience for decades, and changing it might have dangerous repercussions for the business.

Planning and Design Thinking

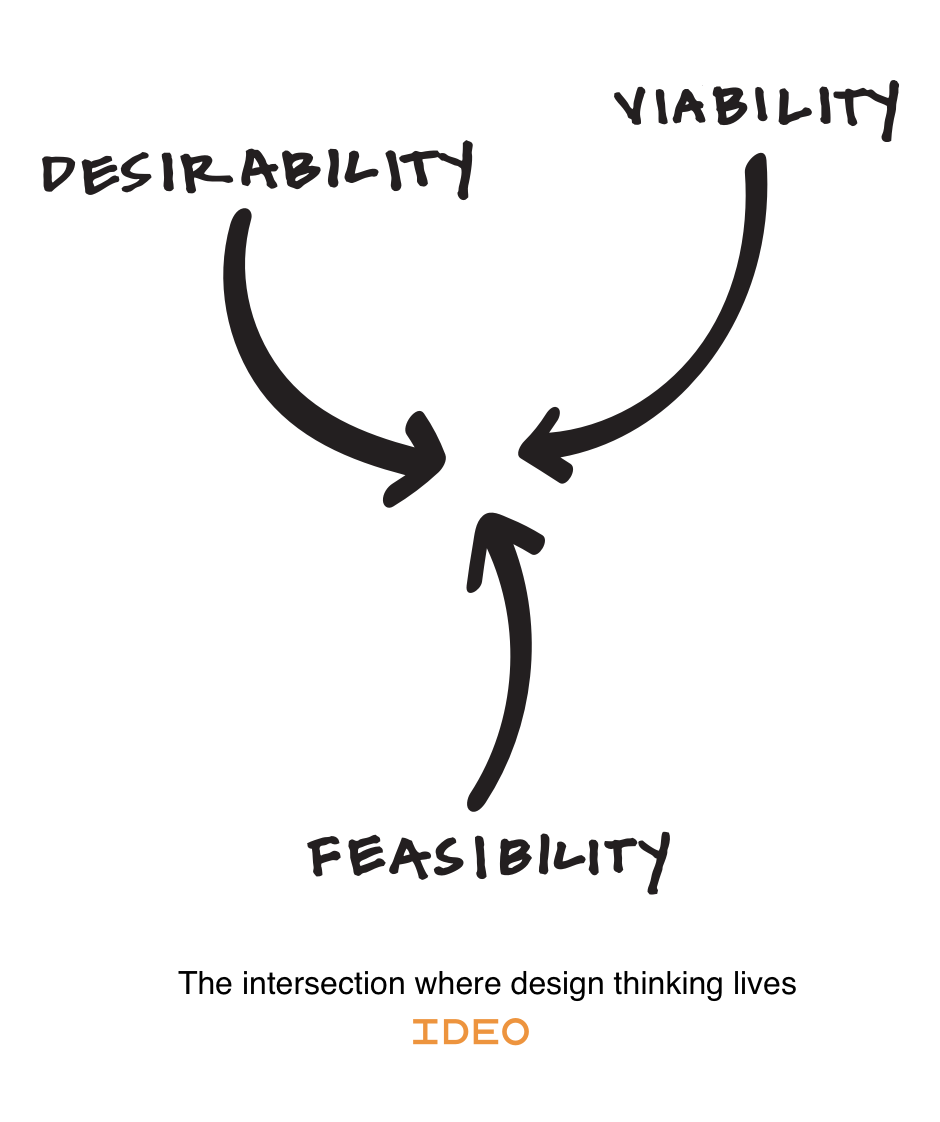

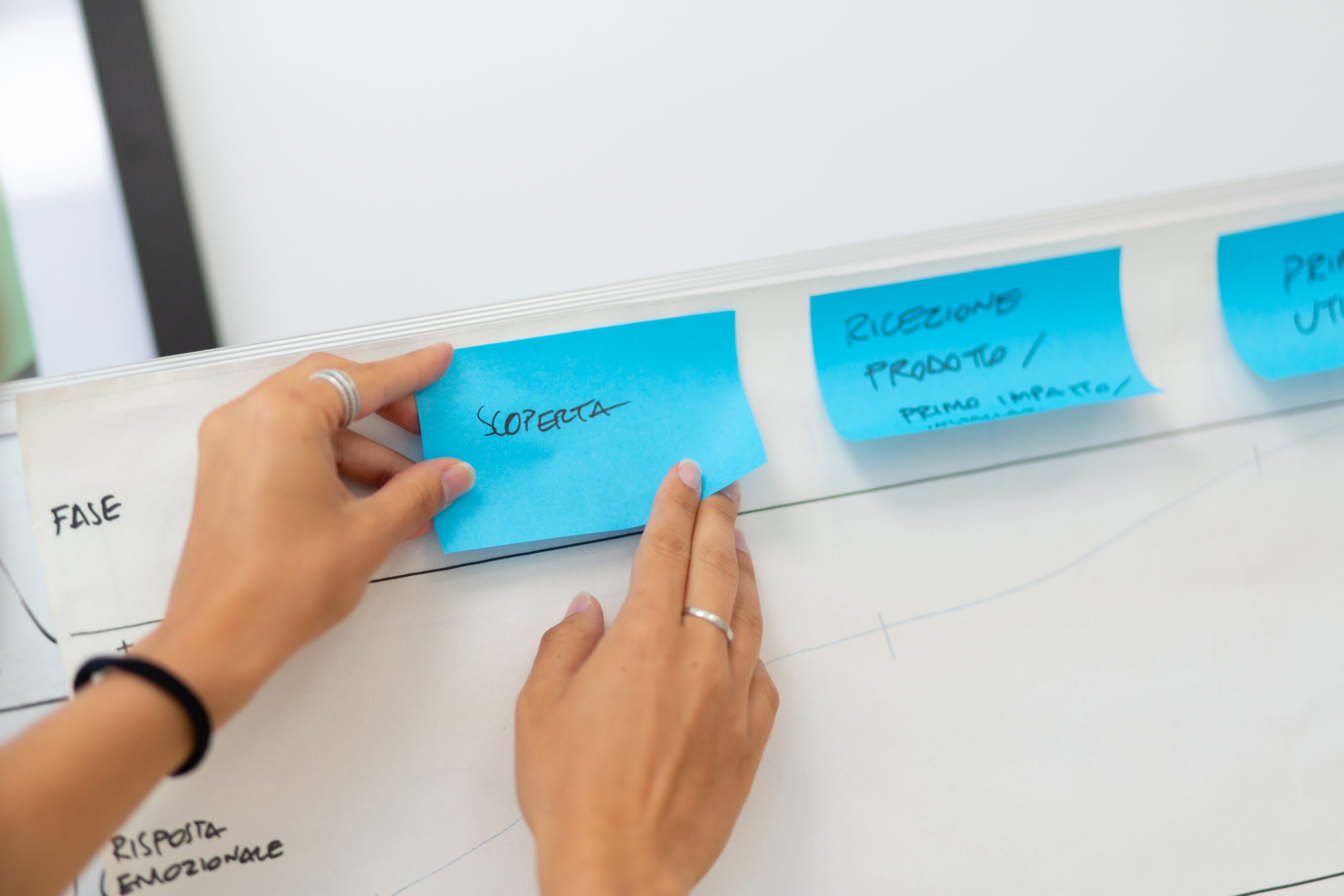

To conduct this analysis, we adopted the typical tools of Design Thinking, condensed into five days of intense work.

The definition of Design Thinking shared by IDEO is enlightening: “Design thinking is a human-centred approach to innovation that draws from the designer’s toolkit to integrate the needs of people, the possibilities of technology, and the requirements for business success.” (Tim Brown, Executive Chair of IDEO)

Design Thinking helped us to clarify and set solid principles from which to build functional solutions.

The process has five steps, the classic stages of Design Thinking:

- Empathise – in the first step, the team empathises with the users, learns about them and gets closer to them

- Define – in this step, the team defines the users’ needs and problems. This is a very important step, as the problems need to be transformed into opportunities.

- Ideate – the team starts generating ideas and solutions, challenging initial and personal assumptions

- Prototype – the idea that best responds to the problem is identified, and the team starts developing a prototype to build solutions

- Test – in the last step, the team challenges the different solutions by testing them with the final users

Translated into concrete activities, these are the tasks the design team worked on:

- User Research

- User flow

- Moodboard (visual research)

- Mock-up + Prototype

- User Testing

User Research

In the first step, we started with user research, conducting phone interviews with a sample of 15 people of different ages. The goal was to collect information on their approach to buying make-up, their experience, and their openness towards a Virtual Try-On app.

The results were then organised into individual files summing up each person, which were useful for defining a user flow based on real needs.

Interestingly, the user research showed that the interviewees fall into two types of users:

- Expert, well-informed people, ready to decide to buy a specific make-up product even if they can’t try it on their skin

- Less interested people, who wouldn’t be able to make a decision without trying the make-up on their skin several times

User flow

In this step, it is important to define the user flow in order to provide a smooth and effective experience for the minimum viable product (MVP).

In particular, we used FlowMapp to create and define the user flow. Based on the research phase and on input from the tech team, we agreed to use a lipstick from a specific beauty brand.

Moodboard

There are already some Virtual Try-On apps on the market, each with its weaknesses and strengths. The world of make-up is full of colours and light effects, so our colour palette had to use light, neutral tones so as not to clash with the lipstick colours. We then chose a font (TT Norms) with a simple, geometric feel and good readability. In this way, we were able to conduct a “visual brainstorming” that helped us explore all the possible options and ideas.

Mock-up and prototype

With all these elements in place, we moved on to the prototype. Using Sketch and InVision, we developed the mock-ups, the graphic representation of our user interface, focusing on the lips. For every corner of the face, we designed icons that initially serve to recognise the face and then help the user for the rest of the experience, letting them choose among the different types of lipstick, colours and matte finishes to try out.

User Testing

The final step was to validate the design with a selected group of people. The goal of User Testing is to refine the details and understand whether anything is unclear from the user’s perspective, so that we can iterate on the design and fix problems before the final development of the app.

Augmented Reality, Virtual Try On, Face Recognition: how much technology!

Right from the start of the sprint, the main goal was clear to everyone: to create realistic graphics in a virtual makeup app.

In augmented reality applications, realism is fundamental, especially if the user has to interact with AR elements. In addition to realism, we decided to focus on a secondary objective: looking for alternatives to ARKit (Apple’s SDK for developing AR applications).

It is therefore important to study the current market and analyze different solutions to develop virtual makeup.

For this project, the development team split into two groups. The first worked with Unity and ARKit to develop an iPad Pro application that offers good tracking and graphical realism as well as high-quality UX and UI. The second was in charge of studying alternative solutions and, in this case, developed the virtual makeup with Facebook’s Spark AR.

ARKit

ARKit allows us to track the user’s face and recreate a 3D model of it in real time, from which we isolate the lip area. In Unity, you can apply a texture with transparency to the 3D model, which makes the model “invisible” wherever we want it to be. This is the result:

We made the face invisible, showing only the user’s lips. The next step was to graphically simulate the lipstick. To do so, we used PBR (Physically Based Rendering) materials. These materials allow us to make a surface more or less shiny and reflective, and to simulate wrinkles and irregularities. This last point is very important for simulating the movement of the lips. Starting from the previous image, we were able to generate a very good Normal Map.
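The article does not say how the Normal Map was produced. As a rough illustration only, the sketch below shows one common way to derive normals from a greyscale height image using finite differences. The function names and the `strength` parameter are hypothetical, and Python is used here purely for readability; the project itself was built in Unity.

```python
import math

def normal_map_from_height(height, strength=1.0):
    """Derive unit normals from a 2D height map (list of rows, values in 0..1).

    Hypothetical helper for illustration; not the project's actual pipeline.
    """
    rows, cols = len(height), len(height[0])
    normals = []
    for y in range(rows):
        row = []
        for x in range(cols):
            # Central differences, clamped at the image border.
            left = height[y][max(x - 1, 0)]
            right = height[y][min(x + 1, cols - 1)]
            up = height[max(y - 1, 0)][x]
            down = height[min(y + 1, rows - 1)][x]
            dx = (right - left) * strength
            dy = (down - up) * strength
            # The normal tilts against the slope; z points out of the surface.
            length = math.sqrt(dx * dx + dy * dy + 1.0)
            row.append((-dx / length, -dy / length, 1.0 / length))
        normals.append(row)
    return normals

def encode_rgb(normal):
    """Pack a unit normal (components in -1..1) into 8-bit RGB values."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normal)
```

A perfectly flat area encodes to the familiar light-blue colour of normal maps, while small bumps such as lip wrinkles tilt the normal and shift the red and green channels.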

Thanks to Light Estimation, we recreated realistic illumination on the virtual surfaces, based on the real light perceived by the device’s camera.
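To make the idea concrete, here is a minimal sketch of how an estimated light level and a transparency mask might combine for a single pixel. It assumes an ambient intensity where roughly 1000 means neutral lighting (the convention ARKit documents for its light estimate); the function names and the per-pixel formulation are illustrative assumptions, not the project's Unity shader.

```python
def shade_with_light_estimate(base_rgb, ambient_intensity, neutral_intensity=1000.0):
    """Scale a lipstick colour (floats in 0..1) by the estimated ambient light.

    Assumption: an intensity of about 1000 corresponds to neutral lighting.
    """
    scale = ambient_intensity / neutral_intensity
    return tuple(min(1.0, max(0.0, c * scale)) for c in base_rgb)

def blend_over_camera(lip_rgb, camera_rgb, alpha):
    """Standard 'over' compositing for one pixel.

    alpha = 0 keeps the camera pixel (the 'invisible' part of the face model),
    alpha = 1 shows only the virtual lipstick.
    """
    return tuple(alpha * l + (1.0 - alpha) * c for l, c in zip(lip_rgb, camera_rgb))

# Example: a red lipstick in a dim room, blended at 80% opacity over a skin tone.
lipstick = shade_with_light_estimate((0.8, 0.1, 0.2), ambient_intensity=500.0)
pixel = blend_over_camera(lipstick, (0.7, 0.5, 0.45), alpha=0.8)
```

In a dim room the virtual colour is darkened before blending, so the lipstick does not appear to glow against a darker camera image.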

The post-processing continued with targeted interventions:

- Grain: grain adds “noise” to the screen. This is very useful for homogenizing virtual and real elements, because the device camera often shows “noisy” real elements next to “perfect” virtual ones.

- Bloom: bloom increases the intensity of reflections. This helps a lot, since we want to show the reflections of glossy lipsticks very clearly.

- Vignette: this effect darkens the edges of the screen, keeping the viewer’s attention on the center. It’s a non-invasive effect, but very effective.

- Color Grading: color grading lets us adjust the colors, for example by increasing the temperature or the contrast. In this situation it is useful to slightly increase the contrast and color saturation, to make the scene slightly livelier.
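The four effects above can be sketched per pixel as follows. This is a simplified illustration under stated assumptions, not Unity's post-processing implementation: the function names, default values and the order of the passes are hypothetical, and the bloom here skips the blur that a real bloom pass applies to bright areas.

```python
import random

def clamp01(v):
    return min(1.0, max(0.0, v))

def add_grain(rgb, amount, rng):
    """Grain: add the same small random offset to every channel."""
    noise = rng.uniform(-amount, amount)
    return tuple(clamp01(c + noise) for c in rgb)

def add_bloom(rgb, threshold=0.8, intensity=0.5):
    """Bloom (simplified): boost only the part of each channel above a threshold."""
    return tuple(clamp01(c + max(0.0, c - threshold) * intensity) for c in rgb)

def vignette(rgb, x, y, strength=0.5):
    """Vignette: darken by squared distance from the screen centre.

    x and y are normalised screen coordinates in 0..1.
    """
    dist_sq = (x - 0.5) ** 2 + (y - 0.5) ** 2  # 0 at the centre, 0.5 at corners
    factor = 1.0 - strength * (dist_sq / 0.5)
    return tuple(clamp01(c * factor) for c in rgb)

def color_grade(rgb, contrast=1.1, saturation=1.1):
    """Color grading: scale contrast around mid-grey, then saturation around luma."""
    contrasted = [(c - 0.5) * contrast + 0.5 for c in rgb]
    luma = 0.2126 * contrasted[0] + 0.7152 * contrasted[1] + 0.0722 * contrasted[2]
    return tuple(clamp01(luma + (c - luma) * saturation) for c in contrasted)

def post_process(rgb, x, y, rng):
    """Assumed pass order: bloom, grading, vignette, then grain on top."""
    rgb = add_bloom(rgb)
    rgb = color_grade(rgb)
    rgb = vignette(rgb, x, y)
    return add_grain(rgb, 0.02, rng)

# Example: a glossy highlight near the centre of the screen.
result = post_process((0.9, 0.3, 0.35), x=0.5, y=0.55, rng=random.Random(0))
```

Applying grain last means the noise covers both camera pixels and virtual pixels equally, which is what helps the two blend together.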

SparkAR

Spark AR Studio is the framework for creating AR filters for Facebook and Instagram. Its authoring system is limited compared to editors like Unity and provides a basic set of features to work with.

Interestingly, face tracking is very advanced; this works by simply analyzing the phone’s camera feed.

Interactions in Spark AR Studio are created with a visual, node-based language.

The end result is a mono-brand filter that you can use directly on your phone, within the Facebook and Instagram apps. Users can access the content simply by scanning a QR code or following a URL.

The user can choose the color of the lipstick by selecting one of the colors on the right side of the screen. Spark AR also makes it possible to take snapshots or record videos that are saved directly on the device. From a distribution point of view, this choice offers a very fast way to release updates and reach users.

The limits of the two approaches

As for ARKit, during development we discovered that the tracking has limits: there are situations where lip tracking is not accurate. This depends on the lighting in the room, the shape of the person’s face, whether the person has a beard, and other factors. However, we can assume that Apple will improve ARKit in the future, with much more accurate tracking.

The limits of Spark AR mainly concern the user interface: the UI system is very limited and barely supports the variety of phone resolutions. For this reason, we replaced the interface with a simpler, more functional version that adapts dynamically to the resolution of different device screens.

What will the future hold for us?

The results obtained were more than satisfactory for both ARKit and SparkAR. We were able to implement the design team’s vision, resulting in a simple and easy to use app that delivers high quality AR content.

A tool of this kind allows every player in the world of cosmetics to let customers try products without applying them directly to the face, which reduces the cost of samples and related equipment and improves in-store hygiene. This last aspect is anything but secondary nowadays.

New technologies are our superpower and they help companies in the transition toward the future. Other successful cases are the projects made for Safilo, Università di Padova and Tecnolaser.

Are you interested in insights from these projects? We share them with the subscribers to our Exploratorium. Find out more about this experimental lab here 👉 https://exploratorium.uqido.com/

WHAT’S EXPLORATORIUM

Exploratorium is Uqido’s laboratory where every week we design, develop and launch on the market a new digital product.

Made up of developers, designers and marketing strategists coming from places like IDEO, YCombinator, Sony and M31, our MVP Lab creates and launches a new prototype every week and, using Growth Hacking techniques, Lean methodologies and Design Sprints, collects feedback from the market.